|

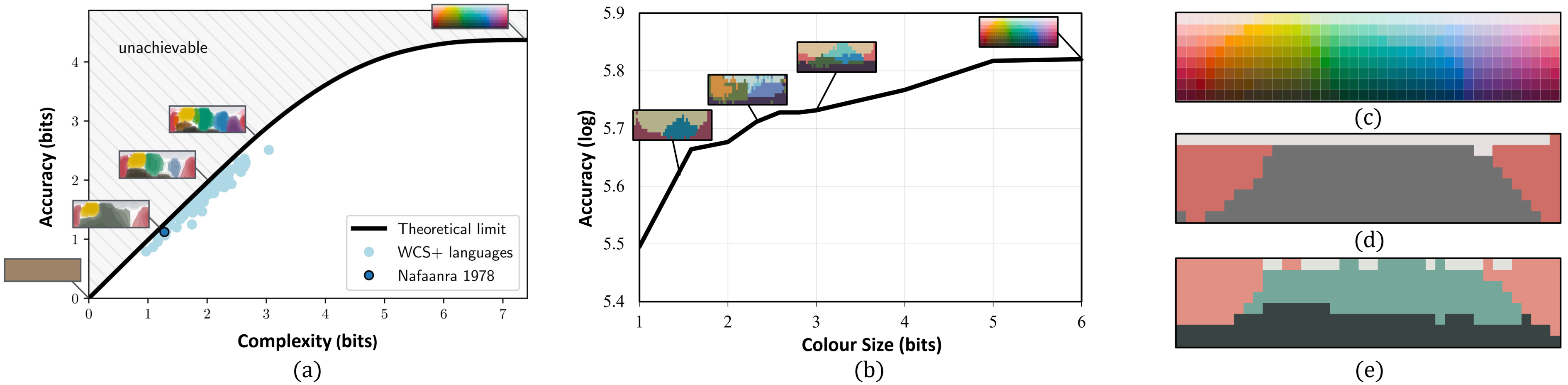

The long-standing theory that a colour-naming system evolves under the dual pressure of efficient communication

and perceptual mechanism is supported by a growing number of linguistic studies, including an analysis of four decades

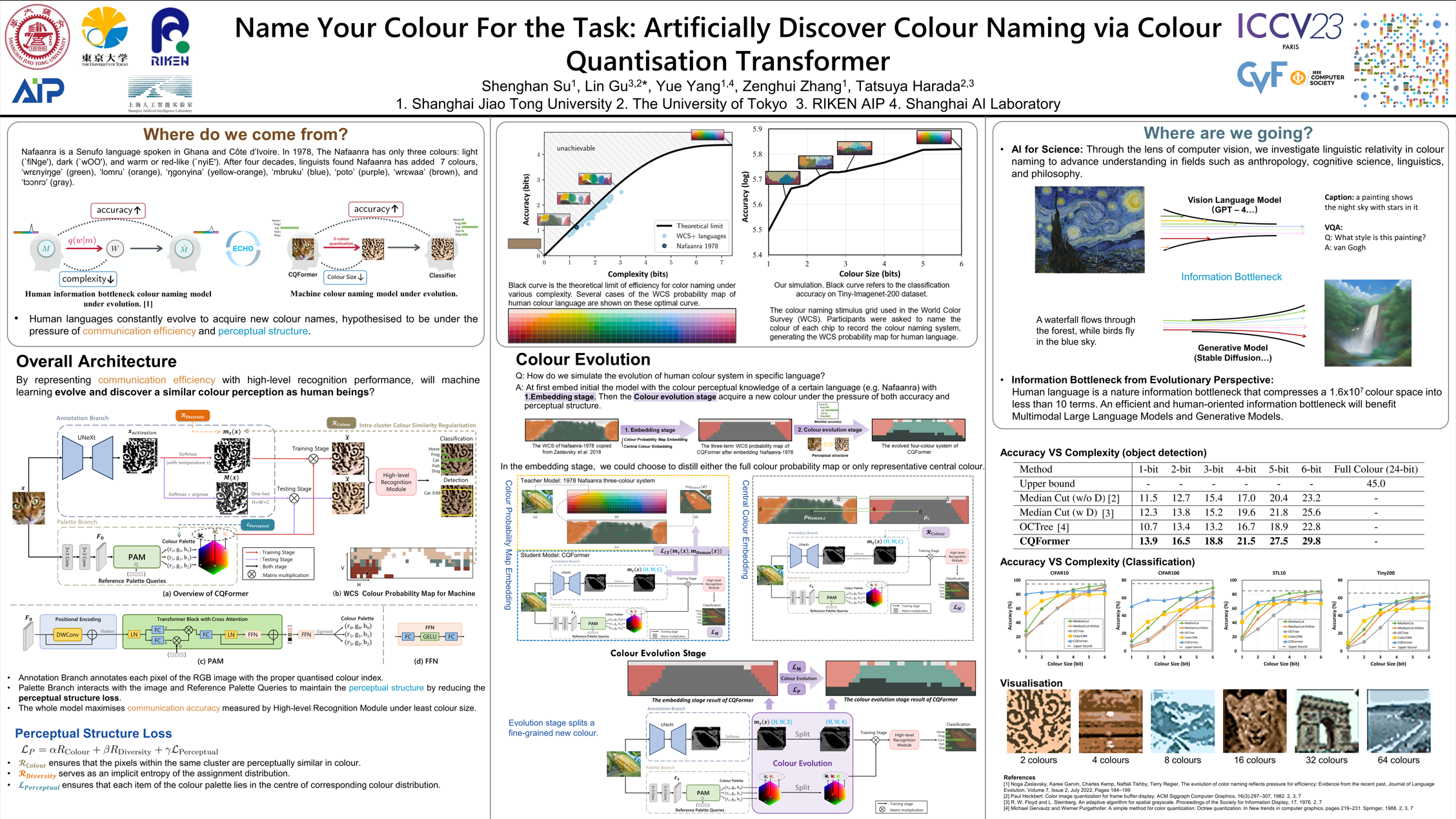

of diachronic data from the Nafaanra language. This inspires us to explore whether machine learning could

evolve and discover a similar colour-naming system by optimising the communication efficiency represented

by high-level recognition performance. Here, we propose a novel colour quantisation transformer, CQFormer,

that quantises colour space while maintaining the accuracy of machine recognition on the quantised images.

Given an RGB image, the Annotation Branch maps it into an index map before generating the quantised image with a

colour palette; meanwhile, the Palette Branch uses key-point detection to locate suitable colours for

the palette within the whole colour space. By interacting with colour annotation, CQFormer is able to balance

both machine vision accuracy and colour perceptual structure, such as a distinct and stable colour

distribution, for the discovered colour system. Interestingly, we observe a consistent evolution

pattern between our artificial colour system and the basic colour terms across human languages. In addition, our

colour quantisation method also provides an efficient means of compressing

image storage while maintaining high performance in high-level recognition tasks such as classification and

detection. Extensive experiments demonstrate the superior performance of our method with extremely low

bit-rate colours, showing the potential to integrate it into a quantisation network that quantises from image to

network activation.

|
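
The index-map-plus-palette representation mentioned in the abstract can be illustrated with a minimal sketch. This is not CQFormer: the learned Annotation Branch is replaced here by a plain nearest-colour lookup in RGB space, and the palette is fixed by hand rather than found by the Palette Branch. The names `quantise` and `bits_per_pixel`, the palette, and the toy image are all hypothetical and chosen only for illustration.

```python
import math


def quantise(image, palette):
    """Map each RGB pixel to the index of its nearest palette colour.

    `image` is a list of rows of (r, g, b) tuples and `palette` is a list
    of (r, g, b) tuples. Returns (index_map, quantised_image).
    Assumption: squared Euclidean distance in RGB stands in for the
    learned pixel-to-index mapping described in the abstract.
    """
    index_map = []
    for row in image:
        index_row = []
        for pixel in row:
            distances = [
                sum((p - c) ** 2 for p, c in zip(pixel, colour))
                for colour in palette
            ]
            index_row.append(distances.index(min(distances)))
        index_map.append(index_row)
    # The quantised image is recovered by looking each index up in the palette.
    quantised = [[palette[i] for i in row] for row in index_map]
    return index_map, quantised


def bits_per_pixel(palette_size):
    """Bits needed to store one palette index (at least 1)."""
    return max(1, math.ceil(math.log2(palette_size)))


# Hypothetical 4-colour palette: black, white, red, blue.
palette = [(0, 0, 0), (255, 255, 255), (255, 0, 0), (0, 0, 255)]
# Toy 2x2 RGB image.
image = [
    [(250, 10, 5), (3, 2, 1)],
    [(240, 245, 250), (10, 20, 200)],
]

index_map, quantised = quantise(image, palette)
print(index_map)
print(bits_per_pixel(len(palette)), "bits per pixel instead of 24")
```

With a 4-colour palette each pixel needs only a 2-bit index plus the shared palette, which is the sense in which a small palette gives an extremely low bit-rate representation.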